Face-Based Image Sorter

The Idea

As someone who regularly trains and fine-tunes custom image models (LoRA, ControlNet, etc.), I hit a recurring wall: Dataset Curation. Sorting thousands of images for a specific subject or character is the most time-consuming part of the pre-training stage. Manual sorting is prone to error, and cloud-based tools are a privacy risk for private datasets.

I built this tool to automate the "Data Cleaning" phase of the ML pipeline. By utilizing InsightFace for high-accuracy embeddings and DBSCAN for unsupervised clustering, I created a way to take a raw, 50,000-image dump and instantly isolate high-quality training data for specific individuals, all while keeping the data 100% offline.

System Workflow

The sorter operates as a 4-stage pipeline, using a local SQLite database to track progress and ensure the process is fully resumable.

Technical Workflow

- Scan & Hash: Recursively indexes the directory. It uses file path hashing to skip duplicates, ensuring that redundant images don't dilute the training set.

- Batch Extraction: Utilizes the InsightFace (buffalo_l) model to detect faces and generate 512-dimensional feature vectors. I implemented background preloading to ensure the GPU/CPU never idles during the extraction of 50k+ vectors.

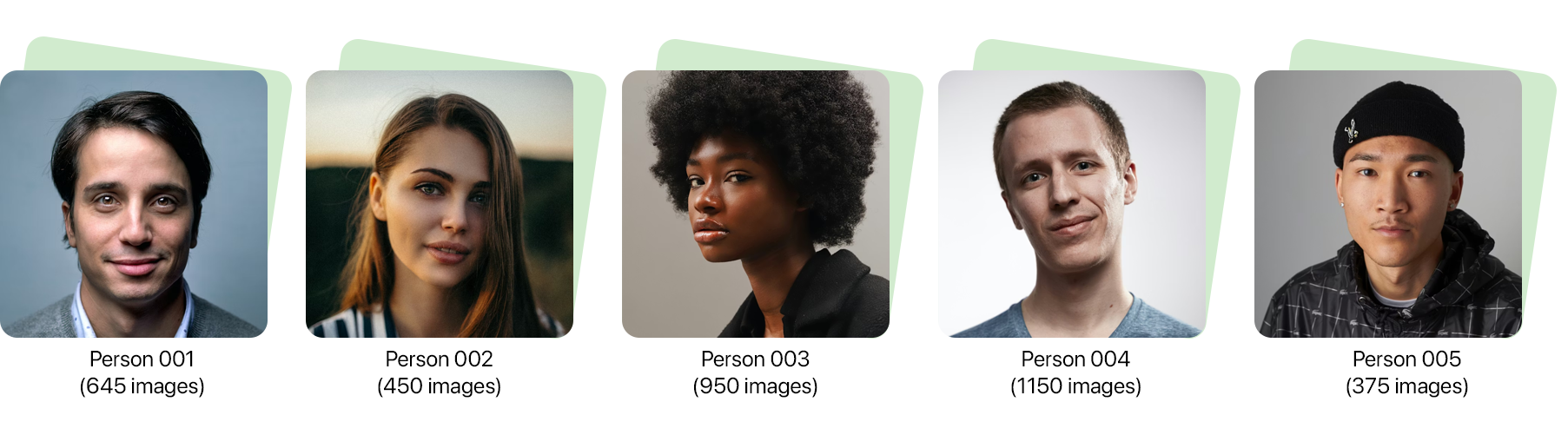

- Identity Clustering: Instead of manual tagging, the system uses DBSCAN (or HDBSCAN). This is critical for ML workflows because it discovers "identities" autonomously, allowing me to find a specific person in a sea of noise without knowing their name.

- File Organization: The backend translates the mathematical clusters into a physical folder structure. This allows for immediate integration into training GUIs like Kohya_ss or OneTrainer.

Reflection

This project turned a manual 6-hour task into a 30-minute automated background process. It proved that in the world of AI training, the "Humanities" of the data, how we organize and curate it, is just as important as the model architecture itself. The efficiency gained here directly translates to faster iteration cycles and higher-quality model weights.

What worked

- Dataset Readiness: The output is immediately usable for training. By sorting "unknowns" and "no-faces" into separate bins, the "garbage" is automatically filtered out of the training set.

- Scalability: By processing in batches and storing only embeddings (not pixels) in RAM, the system remains stable even when the dataset grows to six figures.

- Privacy-First: Since many training datasets contain private or sensitive imagery, the 100% local execution is a non-negotiable feature.

Limitations

- Quality Variance: While the AI groups faces well, it doesn't yet filter for "low-quality" or "blurry" images—meaning a second manual pass for aesthetic quality is still needed before training.

- Computational Intensity: Extracting 50,000 embeddings is a heavy lift for a CPU. For ML practitioners, using the CUDA-accelerated GPU path is almost mandatory for speed.

How to Use

- Clone the repository and create a Python virtual environment.

- Install dependencies:

pip install -r requirements.txt.

- Point

INPUT_IMAGE_DIRinconfig.pyto your raw training dataset. - Set

USE_GPU = Truefor 10x faster processing if you have an NVIDIA GPU.

python scan_images.py(Indexes your raw data)python extract_embeddings.py(The AI identifies the subjects)python cluster_faces.py(Groups subjects into training folders)python organize_folders.py(Physicalizes the folders for your training GUI)

Links

GitHub Repository: calluxpore/Local-Offline-Face-Based-Image-Sorter